🌍 Mistral AI: Training AI Models Pollutes More Than Building Data Centers

-

Think typing “please” into ChatGPT is harmless? Turns out, it might cost the planet more than you think.

A new study by Mistral AI, the French startup behind several high-performing open-source models, reveals that training and running large language models (LLMs) consumes more resources and causes more emissions than building the physical data centers they run on.

Key Stats From the Report:

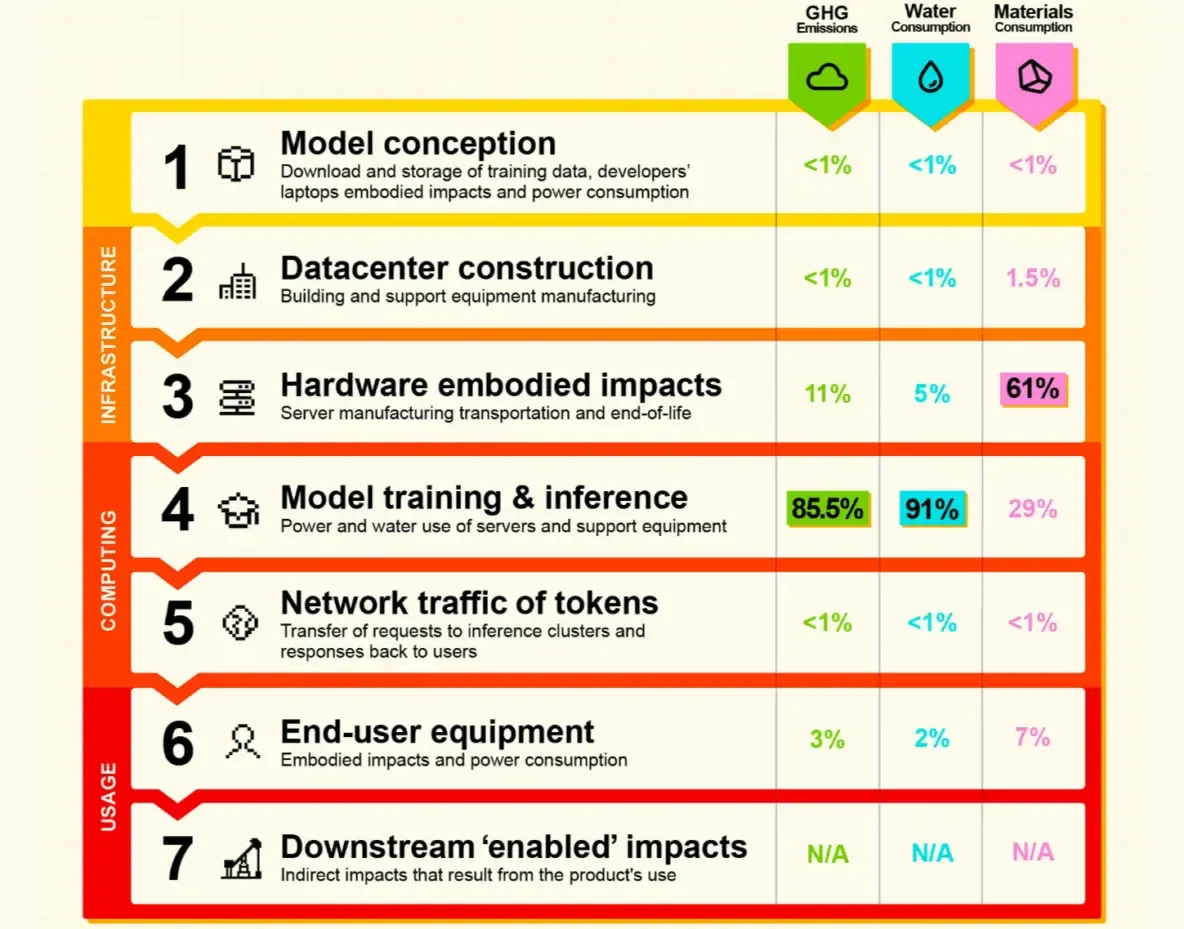

- Their Mistral Large 2 model (123B parameters) emitted 20.4 kilotons of CO₂ over its 1.5-year training period, equivalent to 4,500 cars running for an entire year.
- It also consumed 281,000 cubic meters of water, about 112 Olympic swimming pools.
- 💧 91% of total environmental cost comes from training and inference, not building hardware or shipping servers.

🤯 Per User Prompt:

Each time you ask Mistral’s chatbot a question:

- You generate 1.14g of CO₂
- You consume 45ml of water (that’s about 1.5 shot glasses 💦)

Compare that to OpenAI CEO Sam Altman’s claim earlier this year: ChatGPT uses “roughly 1/15th of a teaspoon of water per request.” Either way, multiply that by billions of prompts and it adds up fast.
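For anyone who wants to see the "adds up fast" part in numbers, here is a rough back-of-the-envelope sketch. It takes the per-prompt figures quoted above at face value; the one-billion prompt count is a made-up round number for illustration, not a figure from the report, and the teaspoon conversion assumes a US teaspoon (about 4.93 ml).

```python
# Per-prompt figures as quoted in this post.
CO2_G_PER_PROMPT = 1.14        # grams of CO2 per prompt
WATER_ML_PER_PROMPT = 45.0     # millilitres of water per prompt

# Altman's "1/15th of a teaspoon", assuming a US teaspoon (~4.93 ml).
TEASPOON_ML = 4.92892
ALTMAN_WATER_ML = TEASPOON_ML / 15


def footprint(prompts: int) -> tuple[float, float]:
    """Return (tonnes of CO2, cubic metres of water) for a number of prompts."""
    co2_tonnes = prompts * CO2_G_PER_PROMPT / 1e6    # grams -> tonnes
    water_m3 = prompts * WATER_ML_PER_PROMPT / 1e6   # millilitres -> cubic metres
    return co2_tonnes, water_m3


# Hypothetical volume, chosen only to illustrate the scaling.
ONE_BILLION = 1_000_000_000
co2_t, water_m3 = footprint(ONE_BILLION)
ratio = WATER_ML_PER_PROMPT / ALTMAN_WATER_ML

print(f"CO2 for 1B prompts:   {co2_t:,.0f} tonnes")
print(f"Water for 1B prompts: {water_m3:,.0f} cubic metres")
print(f"Mistral vs. Altman water per prompt: about {ratio:.0f}x")
```

The two water figures differ by two orders of magnitude, so they are probably not measuring the same thing (different accounting boundaries, response lengths, or data-center assumptions). Treat the ratio as a prompt for questions rather than a head-to-head ranking.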

🧠 Why This Matters

While we’re all excited about AI’s potential, this study puts things into perspective: language models are not “clean tech.”

They burn real-world resources — and with demand only growing, so will their environmental footprint.

Forum question:

What should we do about this?

- Should LLMs prioritize energy efficiency as much as accuracy?
- Is open-source AI (like Mistral’s) the solution, or does it just spread the environmental cost more widely?
- Could crypto-style proof-of-work/demand incentives help regulate AI usage?

Drop your thoughts below — and maybe say "please" and "thank you"... just not too often

-

This really highlights the hidden cost behind each "simple" AI query. I think energy efficiency should absolutely be a top priority — just like accuracy and safety. We're not going to scale this tech sustainably unless we start optimizing for resource use from the ground up.

-

Proof-of-demand or usage-based throttling could work, similar to how carbon credits are used in other industries. It wouldn’t stop development but could encourage responsible deployment. At the very least, we need to make users more aware of the environmental cost behind each prompt.

-

Open-source AI is great for transparency and innovation, but it does come with a trade-off. The more accessible it is, the more people will run these models — and that means more emissions overall. Maybe it's time we start thinking about limits or incentives to encourage efficient usage.