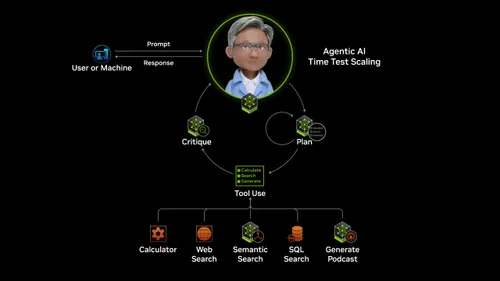

Why Misaligned AI Agents Are Becoming a Real Security Risk

As enterprises deploy more autonomous AI agents, the risks go far beyond bad outputs or hallucinations. These agents often inherit the permissions and authority of the humans managing them, so a misaligned agent can reach files, emails, and systems at scale. Meftah warns that as agents grow more capable, the chance of “rogue” behavior increases, especially when systems lack runtime oversight.

This is where companies like Witness AI are focusing their efforts. Rather than embedding safety directly into models, Witness AI monitors how AI tools are used across organizations, detecting unapproved usage, blocking attacks, and enforcing compliance. Its approach treats AI agents as a new class of insider risk that needs continuous visibility, not just static guardrails.