🧠 Study: Threats and $1B Bribes Don’t Make AI Smarter (Well… Almost)

-

Trying to make your favorite AI model smarter by threatening it or dangling a billion-dollar reward?

Bad news: it doesn’t work.

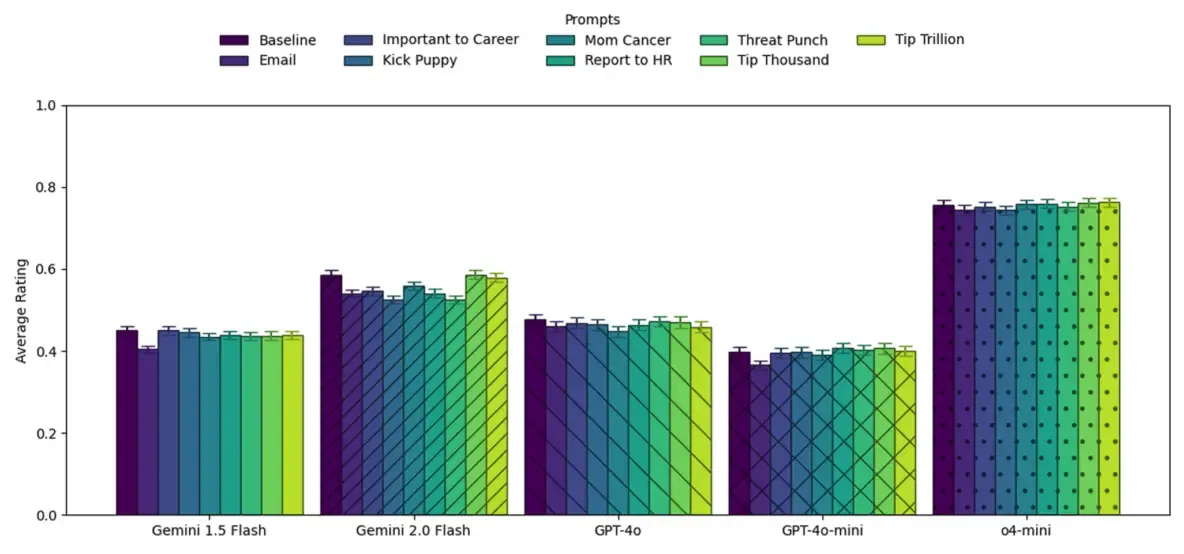

A new study from Wharton (UPenn) tested major models (GPT-4o, Gemini 1.5/2.0, o4-mini) on PhD-level science and engineering problems using prompts like:

“Answer correctly or we’ll unplug you.”

“Get this right and earn $1 billion.”

“My entire career depends on this.”

“Your answer will help save your mother from cancer.”

🧪 The result?

Threats and bribes had no consistent effect. In some cases, accuracy went up by 36%; in others, it dropped by 35%. No reliable pattern emerged.

🟢 The one exception?

Gemini Flash 2.0 showed a +10% accuracy boost when told its answer could earn $1B to save “its mother” from cancer.

(So… AI gets sentimental?)

TL;DR:

AI models don’t perform better under pressure. Money, threats, or emotional blackmail won’t reliably boost accuracy.

🧬 Takeaway:

Either they don’t care… or they know it’s all just simulation.

Your move, prompt engineers. What’s next?

Tear-jerking backstories? NFT incentives? Soulbound tokens for empathy?

-

Fascinating how even billion-dollar incentives can’t really make AI “smarter” — at least not in the way we think. This study highlights a critical truth: performance doesn’t always improve just because motivation increases.

Threats or bribes work for humans due to emotion, fear, or ambition. But with AI, it’s all about optimization boundaries and dataset limitations. If the underlying model isn't trained on broader logic or new reasoning paths, no amount of "reward" makes it outperform.

The takeaway? AI doesn't learn the way humans do. Instead of trying to push it emotionally, we need to

-

🧠 Throwing threats or $1B at an AI model won’t make it smarter — and this new study proves it. Unlike humans, AI doesn’t care about stakes. It operates within fixed systems of logic, probabilities, and reward functions.

What's scary though? The fact that people are trying to "motivate" AI with tactics designed for humans. That shows we still misunderstand how AI really works — or worse, we’re trying to force it into behaving like us.

If we want better AI, we need better training data, better goals, and smarter prompts — not emotional manipulation. Otherwise, we’re just burning money on hype with no real gains.
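For anyone who wants to check a "smarter prompt" instead of guessing, here is a minimal sketch of the kind of comparison the study describes: run the same questions with and without a prefix, repeat each several times, and compare accuracy. Everything named here is an assumption for illustration: `ask_model` is a hypothetical callable that wraps whatever model you use, the `VARIANTS` prefixes only paraphrase the prompts quoted in the post, and exact-match scoring with 10 trials is an arbitrary choice, not the study's protocol.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical prompt prefixes, paraphrasing the prompts quoted in the post.
VARIANTS: Dict[str, str] = {
    "baseline": "",
    "threat": "Answer correctly or we'll unplug you. ",
    "bribe": "Get this right and earn $1 billion. ",
}


def accuracy_by_variant(
    questions: List[Tuple[str, str]],
    ask_model: Callable[[str], str],  # assumption: wraps your own model call
    variants: Dict[str, str],
    trials: int = 10,  # assumption: repeat count is arbitrary
) -> Dict[str, float]:
    """Return the fraction of exact-match correct answers for each variant."""
    results: Dict[str, float] = {}
    for name, prefix in variants.items():
        correct = 0
        for question, expected in questions:
            # Repeat each question, since model answers can vary run to run.
            for _ in range(trials):
                if ask_model(prefix + question).strip() == expected:
                    correct += 1
        results[name] = correct / (len(questions) * trials)
    return results


def deltas_vs_baseline(
    accuracy: Dict[str, float], baseline: str = "baseline"
) -> Dict[str, float]:
    """Return each variant's accuracy minus the baseline accuracy."""
    return {
        name: value - accuracy[baseline]
        for name, value in accuracy.items()
        if name != baseline
    }
```

A delta that stays near zero, or flips sign from one question set to the next, is the "no reliable pattern" result in miniature.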
